Comparison of various deep research searches

Open Knowledge?

A couple of weeks ago I did another community hangout on AI, this time focused on Deep Research. To my delight, longtime friend and collaborator Kevin Feyen showed up, as did David Wiley. As good conversations do, we ended up talking not just about deep research tools but about the wider implications for OER and the state of Generative AI itself.

The three tools I chose that day were Perplexity Deep Research, Google Deep Research, and an open-source deep research agent that uses the SERP API for search.

A video summary is here: https://somup.com/cTnThw7hM4

I used the same prompt for all three searches and kept the parameters (i.e., depth and scope) as close to identical as I could.

Essentially, all of them appear to run web searches, read the resulting pages, and then use their LLM to combine what they found into a cohesive summary. Some do it better or faster than others, but that’s what technology races are for.
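To make that general pattern concrete, here is a minimal Python sketch of a search-read-summarize loop. It is an illustration under stated assumptions, not how Perplexity, Google, or the open-source agent actually work: `Page`, `deep_research`, and the pluggable `search` and `llm` callables are hypothetical names, and real page fetching and model calls are left out.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Page:
    """A search result: where it came from and its extracted text."""
    url: str
    text: str


# Hypothetical plug-in points: a real tool might back `search` with a SERP API
# and `llm` with a hosted or local model; here they are just callables.
SearchFn = Callable[[str], List[Page]]
LLMFn = Callable[[str], str]


def deep_research(question: str, search: SearchFn, llm: LLMFn,
                  depth: int = 2, max_pages: int = 10,
                  max_queries: int = 3) -> Tuple[str, List[str]]:
    """Search, read, and ask for follow-ups for up to `depth` rounds, then summarize.

    Returns the final report and the list of source URLs the model was given.
    """
    queries = [question]
    seen: Dict[str, Page] = {}  # url -> page, in the order first found
    for _ in range(depth):
        follow_ups: List[str] = []
        for query in queries:
            # 1. Search the web and "read" the pages, deduplicated by URL.
            for page in search(query):
                if page.url not in seen and len(seen) < max_pages:
                    seen[page.url] = page
            # 2. Ask the model what to look up next, one query per line.
            notes = "\n".join(p.text for p in seen.values())
            reply = llm(f"Question: {question}\nNotes:\n{notes}\n"
                        "List follow-up search queries, one per line.")
            follow_ups.extend(line.strip() for line in reply.splitlines()
                              if line.strip())
        # Keep the next round small; stop early if the model has nothing to add.
        queries = follow_ups[:max_queries]
        if not queries:
            break
    # 3. Combine everything into one summary, numbering sources for citation.
    sources = list(seen)
    context = "\n\n".join(f"[{i}] {url}\n{seen[url].text}"
                          for i, url in enumerate(sources, start=1))
    report = llm(f"Write a cited summary answering: {question}\n"
                 f"Sources:\n{context}")
    return report, sources
```

In this sketch, `depth`, `max_pages`, and `max_queries` (again, hypothetical names) are the knobs that trade speed for thoroughness: each extra round means more searches and more model calls. Swapping in a real search backend or a different model only means supplying different `search` and `llm` functions; the loop itself stays the same.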

Perplexity

I’ve enjoyed the free version of Perplexity for a while; when it first came out, it was one of the more innovative LLM products. I hadn’t used its Deep Research mode much yet, though, and I appreciated the results.

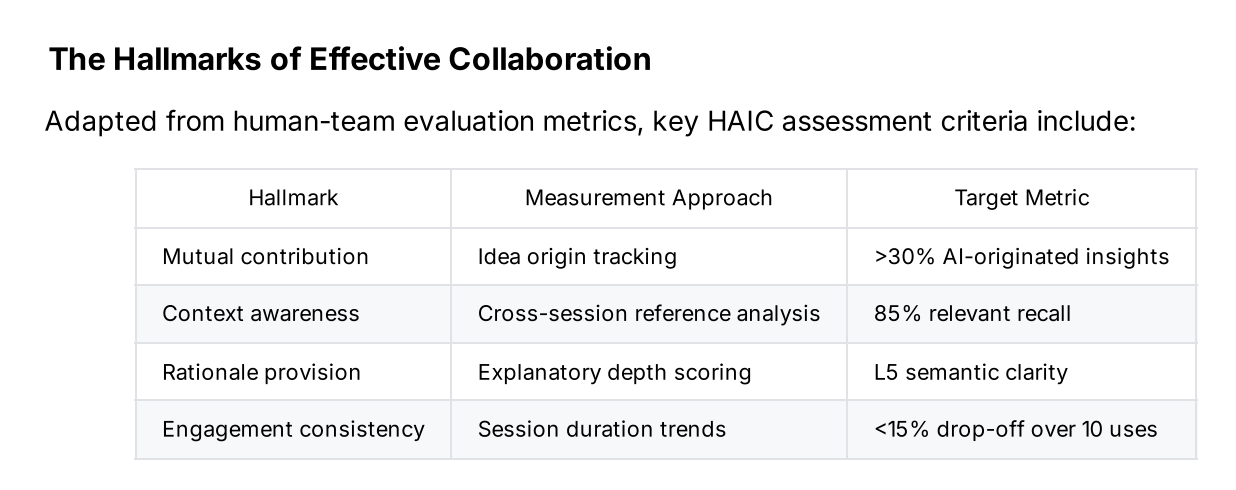

The report was nicely formatted and even included a table, though I couldn’t find that table in any of the sources listed at the end of the report.

It was pretty fast and seemed to do a good job balancing situational context with technical information. Link to report.

Google

Google has been my favorite AI vendor for a while, but much of the really good stuff (Live View, etc.) has been tucked away in AI Studio, where most non-developers wouldn’t feel comfortable going. Deep Research, however, is out in the open. Again, if Google wants to build something Joe Everyman will use, I think it should worry less about decimal points and model names and focus instead on what each model does. Still, it’s more intuitive than OpenAI’s interface, where you have to select Deep Research from the regular chat as an extra option.

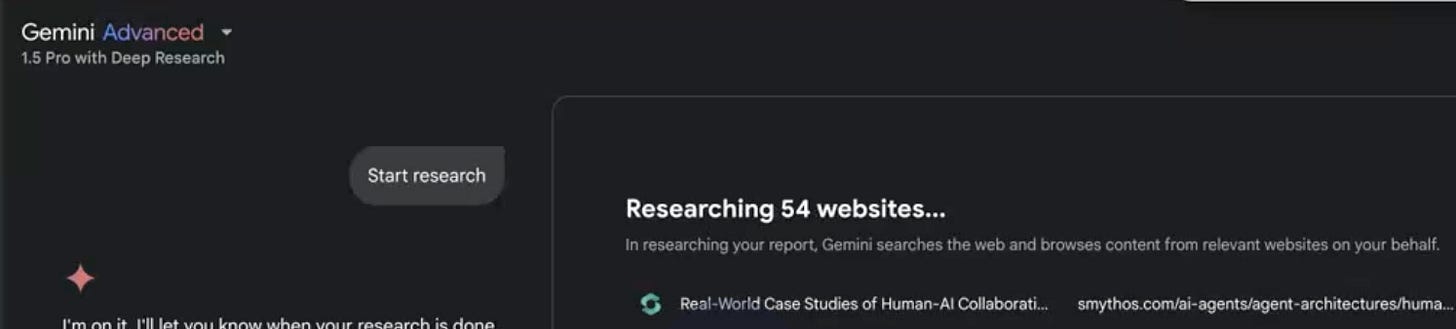

Of course, it asked me to verify its plan first, then off it went. I love how it shows you the sources it’s using as it compiles; I’d love the ability to steer it as I see them (e.g., “less healthcare, more education”), but that’s probably wishful thinking. The final report was formatted, but plainly, and it didn’t automatically include sources.

The final report exports seamlessly to a Google Doc, which you can see here. I’ve been more impressed with Deep Research in the past, so maybe I just caught it on an off day. For its usual, fuller quality, see a previous report it ran for me on why it feels like there have been a lot more plane crashes recently, here.

Local/Open Source

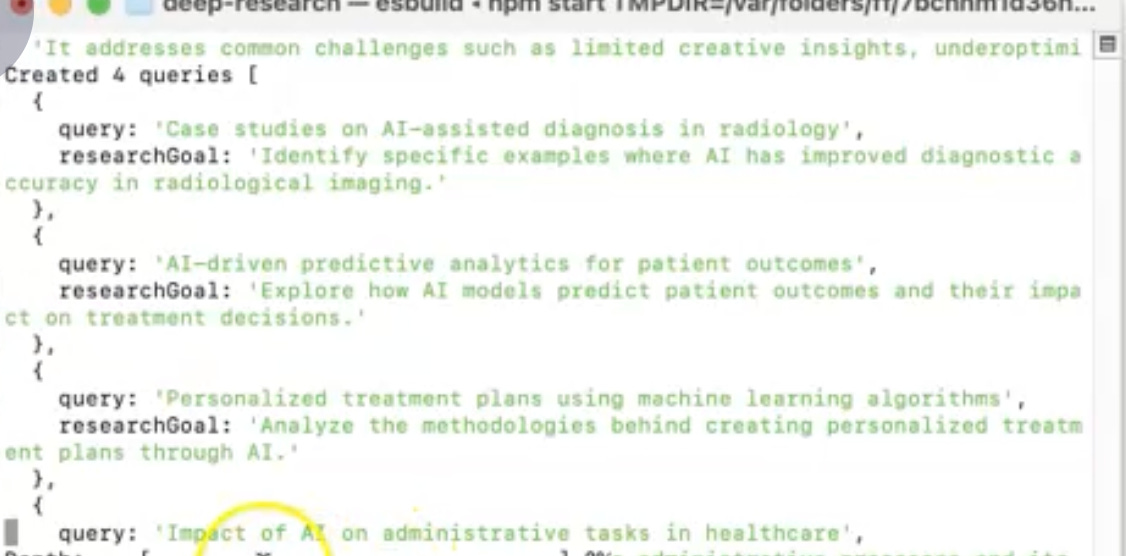

I saw a GitHub repository advertised on Twitter for a deep research platform that needed only a SERP API key (which I already had) and a relatively simple setup. For this demo, I couldn’t resist having it use a local LLM as well (in this case, llama3-gradient:8b running in Ollama).

It was markedly slower, but I also gave it higher parameters; eventually I ran into my request limits… whoops. Even so, it generated a high-quality Markdown report that was saved locally, very similar in style to Google’s Deep Research output, as shown here. And since it’s free and fully open source, perhaps it’s worth the risk in exchange for seeing more clearly where it’s getting its information?

The full report output is here. To be fair, I should re-run it and see whether lower parameters still produce something useful. That said, I’ve used it for other searches lately, and in general it does seem to surface different results than Google DeepMind.

Overall Implications

As we use AI for more and more of everything, I’m concerned that high-quality niche sources will never get found. This goes beyond OER: for example, when I searched “how to teach fractions research,” I used to get links to high-quality learning resources; now I usually get more commercially minded sites and not things like the Progressions documents.

Thus, open-source LLMs matter not just for transparency under the hood of the model, but also because LLMs used like this could potentially be wielded for power or misinformation: if Google decided not to show its sources while searching, there’s nothing any of us could do about it. That’s my concern, and it’s why I’m fully committed to using open LLM systems whenever and wherever I can.

It’s long been expected that spammers would try to get promotional (and worse) content into LLM results, so if you’re already seeing it, that’s significant. I wonder how it works.