DeepSeek R1 (Llama distillation)

Open source AI from China?

Much has been made of DeepSeek R1, the open source model (possibly trained on OpenAI data/models).

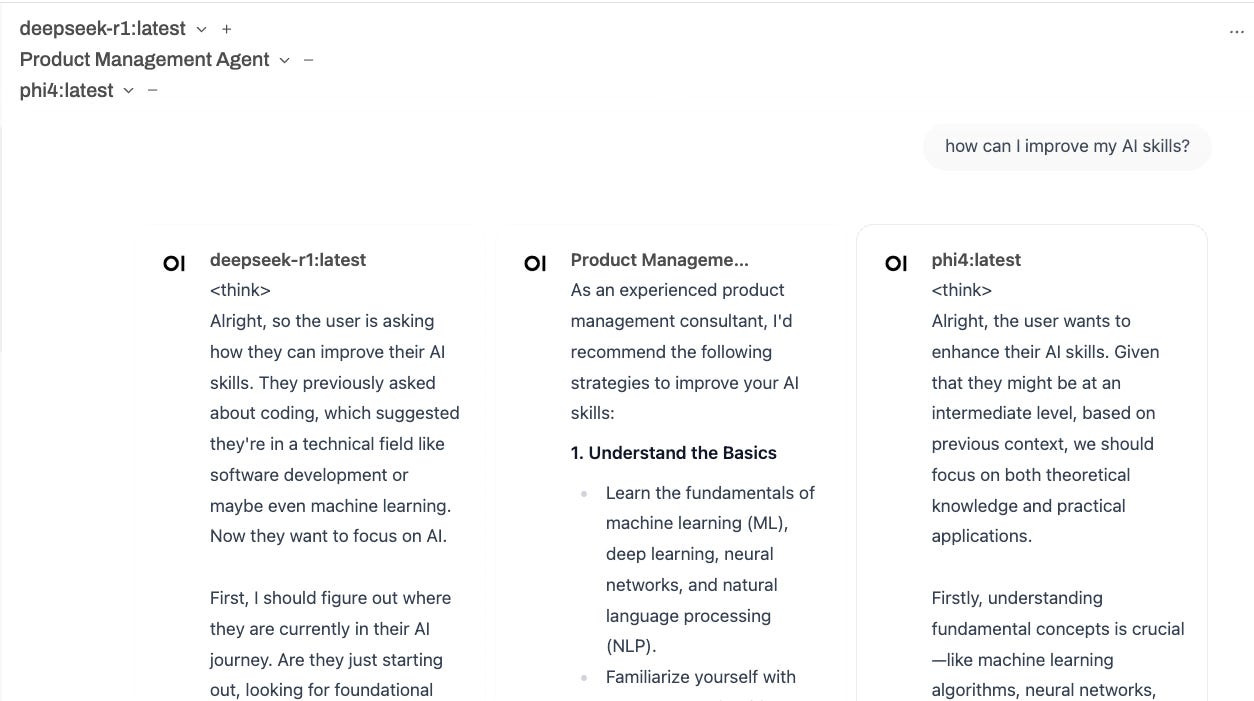

This post is focused on my experiences using the local version available on Ollama.com and viewable here in Open WebUI.

Overall, the chain of thought is pretty good, once I had adjusted a few things like context length. Normally I like to have good system prompts for various purposes (the Product Management Model you see below has an extensive system prompt for product management), but on Hugging Face I saw that you shouldn’t give it a system prompt, as it was trained to be a blank canvas for its internal thoughts.
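For anyone who would rather script this than click through Open WebUI, here is a minimal sketch of the same setup against Ollama's local REST API: a larger context window via the `num_ctx` option, and a user message with no system prompt. The model tag `deepseek-r1:8b` and the context size of 8192 are my assumptions, not values from my actual setup, and the helper names (`build_payload`, `split_thinking`, `ask`) are made up for illustration. It also assumes the model wraps its reasoning in `<think>` tags inside the reply, which is how the distilled models behaved for me; newer Ollama versions may return the reasoning separately.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint
MODEL = "deepseek-r1:8b"  # assumed tag; use whatever `ollama list` shows


def build_payload(prompt, num_ctx=8192):
    """Build a chat request with no system message and a larger context window."""
    return {
        "model": MODEL,
        # Only a user turn: no system prompt, per the Hugging Face guidance.
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
        "options": {"num_ctx": num_ctx},  # context length in tokens (assumed value)
    }


def split_thinking(text):
    """Separate the <think>...</think> block from the final answer."""
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if match is None:
        return "", text.strip()
    return match.group(1).strip(), text[match.end():].strip()


def ask(prompt, num_ctx=8192):
    """Send the prompt to a locally running Ollama server."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(build_payload(prompt, num_ctx)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return split_thinking(body["message"]["content"])
```

With the Ollama server running, `thoughts, answer = ask("How should I learn Go?")` should give you the chain of thought and the final answer as two separate strings.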

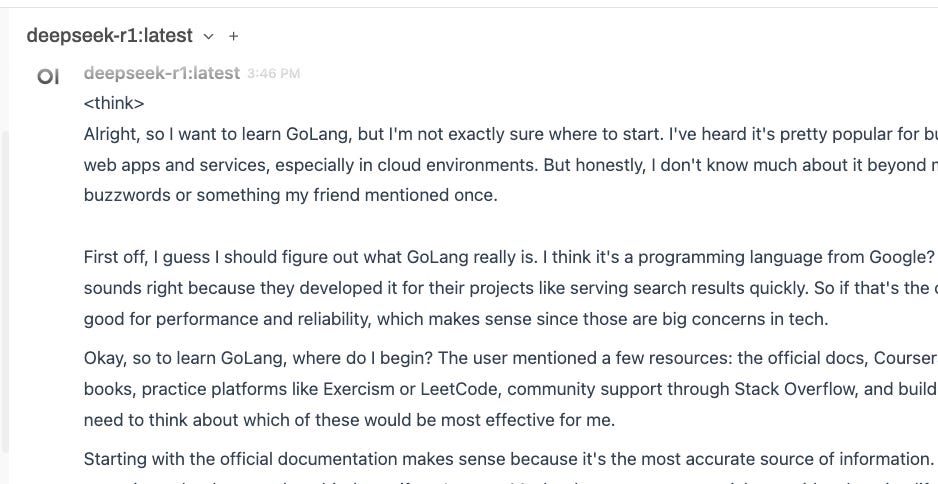

Another good example showing its internal thinking, from when I asked the distilled model about its origins… hmm.

Practical comparison between local (Qwen) DeepSeek and O1 Pro (reasoning)

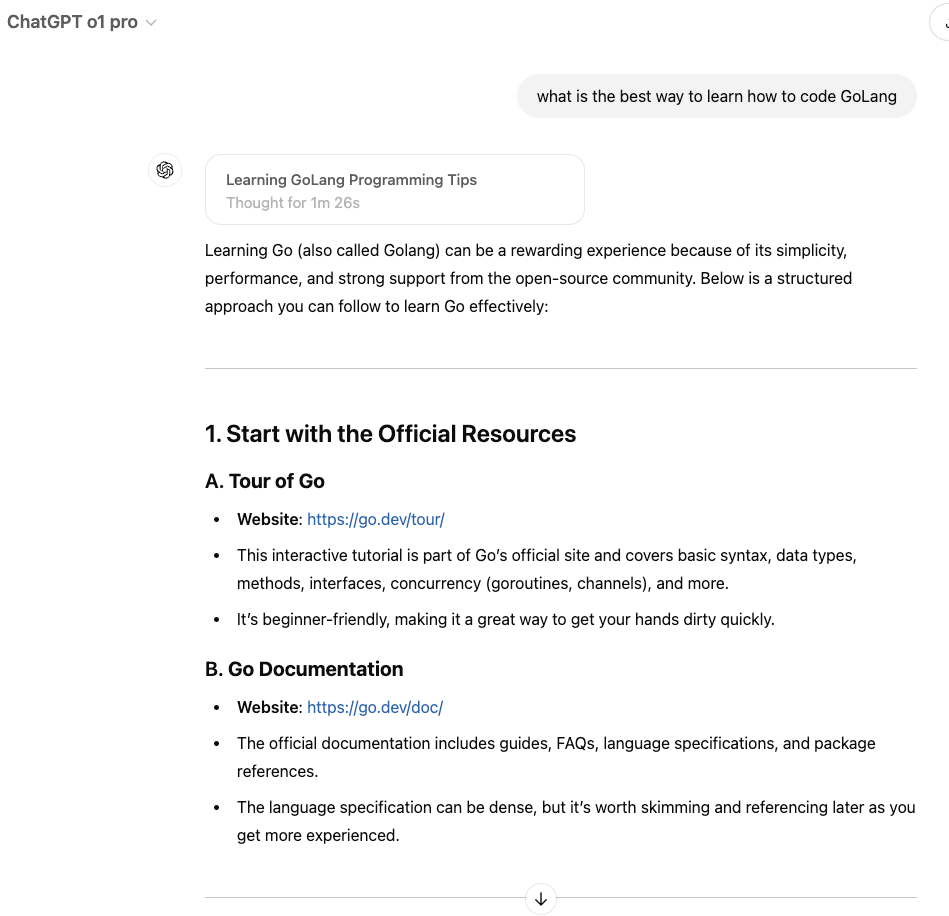

I asked it a fairly simple question about how to learn something (Go). I wanted to see how its reasoning compared to OpenAI’s O1 Pro.

DeepSeek:

O1 Pro:

Honestly, they were remarkably similar. Both focused on official documentation rather than (as I was hoping) first finding out how much programming knowledge I had as a jumping-off point. DeepSeek was more practical in sending me to official websites, but O1’s response was much deeper. (Perhaps I could have tuned DeepSeek’s response window, but I kept all the default values for this.)

Verdict

I have played a bit with the web version of DeepSeek, but wouldn’t dare trust it with business or personal secrets. The official API is cumbersome to use, and its rate limit (as of this writing) is too low to be useful for production applications.

I’m most excited about the mixture-of-experts approach it took to training and usage. (Good explanation here, although information is still forthcoming.)